What happens when AI generates false facts, biased results—or worse?

As generative AI systems like ChatGPT, Claude, and Gemini continue to redefine productivity across industries, they also bring a new set of challenges. While these AI tools are powerful, their outputs aren’t always accurate, safe, or ethically sound.

From misquoting sources to producing offensive or misleading content, uncontrolled AI responses pose real-world risks—ranging from damaged brand reputation to serious legal consequences. With companies and developers racing to integrate AI into workflows, controlling its output has become more than a technical concern—it’s a business and ethical imperative.

In this article, we’ll break down why is controlling the output of generative ai systems important.

Let’s explore how to keep AI powerful and accountable.

What Are Generative AI Systems?

Generative AI systems are machine learning models trained to produce original content. Unlike traditional algorithms that follow strict, rule-based outputs, generative models like GPT-4, DALL·E, and Stable Diffusion can create human-like text, realistic images, code snippets, music, and more—all from simple prompts.

These systems rely on large-scale data and deep learning architectures to generate responses based on learned patterns, not direct facts. As a result, their outputs often feel natural and insightful—but they’re not infallible.

Common Types of Outputs: Text, Images, Code, Audio

Generative AI systems are now capable of producing content across multiple domains:

- Text: Emails, articles, essays, scripts, chat responses (e.g., ChatGPT, Claude)

- Images: AI-generated art, product mockups, and concept visuals (e.g., Midjourney, DALL·E)

- Code: Autocomplete suggestions, bug fixes, entire scripts (e.g., GitHub Copilot, Replit Ghostwriter)

- Audio: Voice synthesis, music generation, podcasts (e.g., ElevenLabs, Suno)

These outputs are powerful for productivity—but each carries potential risks if left unchecked.

Why AI Outputs Are Not Always Reliable (Hallucinations & Bias)

Despite their impressive capabilities, generative AI systems are prone to:

- Hallucinations: Fabricating facts or statistics that sound plausible but are completely false.

- Biases: Repeating or amplifying stereotypes and biased perspectives found in training data.

- Inconsistencies: Producing contradictory or irrelevant answers depending on prompt phrasing.

These flaws stem from the underlying training data and lack of real-world understanding. For example, an AI might confidently claim that “New York is the capital of the U.S.” simply because of pattern errors in its dataset.

“The lack of proper oversight in generative AI systems opens the door to everything from bias to legal violations.”

— Margaret Mitchell, Chief Ethics Scientist at Hugging Face

Uncontrolled output isn’t just inconvenient—it can mislead readers, damage trust, and create compliance issues.

Why Output Control Is Critical

As generative AI becomes more embedded in business workflows, marketing, education, and even healthcare, controlling its output is no longer optional—it’s essential. Without proper safeguards, AI-generated content can unintentionally cause harm, violate policies, or erode trust. Let’s explore the core reasons why managing AI outputs is crucial:

· Preventing Harmful or Misleading Content

AI can produce text or visuals that appear factual but are entirely fabricated. These “hallucinations” can spread misinformation, damage reputations, or even pose public safety risks—especially in domains like finance, health, or law.

Up to 20-30% of responses from large language models (LLMs) may contain factual inaccuracies or fabricated information.

— Source: Stanford Center for Research on Foundation Models (2023)

· Reducing Bias and Discrimination

Generative AI is only as unbiased as the data it’s trained on. Left unchecked, outputs can reinforce stereotypes or discriminatory views, potentially leading to reputational fallout or alienating users from marginalized groups.

Over 60% of tested LLMs showed detectable demographic, gender, or political bias in responses.

— Source: MIT Technology Review & AI Fairness 360 (IBM Research)

· Protecting Intellectual Property & Privacy

AI models often draw on vast datasets scraped from the internet, which may include copyrighted materials or private information. Controlling output ensures companies don’t unintentionally publish plagiarized content or leak sensitive data.

· Ensuring Compliance with Legal & Ethical Standards

From GDPR to corporate governance policies, generative AI must align with evolving regulations. Output control helps mitigate legal exposure and supports ethical AI development, especially in highly regulated industries.

How to Control Generative AI Outputs Effectively

As generative AI continues to evolve, proactive output control becomes the foundation of safe, scalable deployment. Whether you’re building your own AI applications or integrating third-party tools, ensuring the accuracy, fairness, and safety of generated content must be a core part of your strategy. Below are key methods and best practices to implement effective AI output control:

Key Risks of Uncontrolled AI Output

1. Real-World Examples of AI Hallucinations

AI models can confidently generate false information—commonly referred to as “hallucinations.”

In 2023, several legal and media cases involved AI-generated citations, news facts, or data points that were entirely fabricated, leading to public backlash or professional consequences.

2. Misinformation and Deepfake Risks

Advanced AI systems can now create realistic images, audio, and videos—making deepfakes indistinguishable from real content.

The number of deepfake videos online doubled every six months from 2022 to 2024.

— Source: Deeptrace Threat Intelligence Report

Without safeguards, generative AI can be exploited to spread false political narratives, impersonate individuals, or mislead the public.

In a 2024 study by NewsGuard, 79% of AI-generated news articles contained false or misleading claims.

— Source: NewsGuard AI Tracker, 2024

3. Corporate and Brand Risk Due to Inaccurate Output

Imagine a product description, financial report, or customer email generated by AI that contains errors or tone-deaf messaging. These small mistakes can erode consumer trust, spark PR crises, or expose the company to legal action—all avoidable with proper output validation.

Only 39% of users trust generative AI to deliver accurate information without human verification.

— Source: Pew Research Center, 2024 Survey on AI and Trust

Techniques for Controlling AI Output

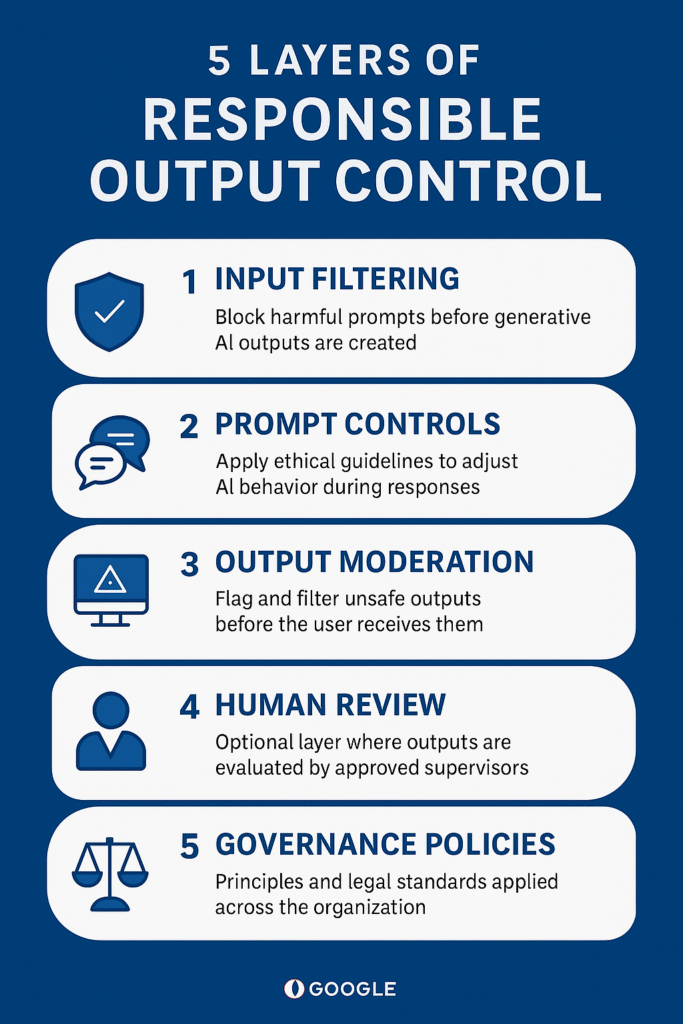

Controlling generative AI isn’t just about preventing harm—it’s about building trustworthy, high-quality systems that deliver accurate and ethical results. Whether you’re deploying AI in content creation, customer support, or enterprise tools, these techniques form the backbone of responsible output management.

· Prompt Engineering and Guardrails

By carefully designing prompts, you can steer AI systems toward more accurate, context-aware responses. Combined with predefined constraints or “guardrails”, prompt engineering helps limit unwanted behavior and reduce hallucinations before the output even begins.

· Post-Processing Filters & Human-in-the-Loop Systems

Once content is generated, post-processing layers can automatically scan for bias, toxicity, or inaccuracies. In sensitive use cases, adding a human-in-the-loop (HITL) ensures critical review, especially for legal, medical, or financial applications.

· Using APIs with Moderation Layers (e.g., OpenAI, Anthropic)

Leading AI platforms now offer built-in content moderation APIs, safety classifiers, and response filtering tools. Leveraging these moderation layers—like OpenAI’s moderation endpoint or Anthropic’s Constitutional AI—can help enforce safe and aligned outputs by default.

· Adversarial Testing & Red-Teaming AI Models

To truly understand how an AI might fail, organizations should employ adversarial testing—intentionally probing for edge cases, biases, or failures. Red-teaming involves simulating malicious use cases to identify vulnerabilities before they go live.

Tools & Frameworks for Responsible Output Management

Managing the output of generative AI systems isn’t just about technology—it’s about trust. Leading AI platforms now include integrated safety, moderation, and governance features to reduce risk and enhance reliability.

Comparative Table of Major AI Platforms & Their Safety Features

| Platform | Output Moderation | Human Review Support | Bias Mitigation | Red-Teaming Tools | API-level Controls |

| OpenAI | Yes (Moderation API) | Optional (via GPT-4 usage) | Yes (prompt filters, content classifiers) | Internal & external red-teaming | Fine-tuning, moderation layers |

| Google AI (Gemini) | Yes (Safety classifier layers) | Yes (Enterprise tools) | Yes (multilingual fairness features) | Yes | API & model-level filtering |

| Meta (LLama) | Limited (community-driven) | Limited (open-source review) | In progress (bias audits) | Yes (via external researchers) | Model-level control only |

| Anthropic (Claude) | Yes (Constitutional AI) | Yes (enterprise-level feedback loop) | Yes (principled alignment model) | Yes | Very strong ethical guardrails |

| Cohere | Customizable | Yes (via Command R+) | Partial (user-defined) | Early stages | Moderate API controls |

Case Studies: Real Consequences of Uncontrolled AI Output

Case Study 1: Chatbot Hallucination in Healthcare

Context:

A well-known health platform integrated a generative AI chatbot to answer basic medical queries.

Incident:

A user inquired about dosage for a specific medication. The AI, without proper output safeguards, hallucinated a dosage that was incorrect and potentially dangerous.

Outcome:

Although the user double-checked with a healthcare provider, the incident raised red flags. The company faced public criticism and paused the rollout.

Key Lesson:

In high-stakes industries like healthcare, human-in-the-loop and domain-specific knowledge enforcement is non-negotiable.

Case Study 2: Brand Backlash from Unfiltered AI Image Generation

Context:

A creative agency used an AI image generator for client campaign drafts without enabling content filters.

Incident:

One generated image included culturally insensitive elements. The agency didn’t catch it before sending mockups to the client.

Outcome:

The client expressed serious concern, ending the contract early. The incident was also shared on social media, affecting the agency’s reputation.

Key Lesson:

Content moderation and brand-safety filters must be part of the AI output workflow, especially in public-facing materials.

Case Study 3: Copyright Violation from AI-Generated Text

Context:

A news site experimented with AI-generated articles using a generative language model for sports reporting.

Incident:

Several AI-generated articles mirrored phrasing and structure from copyrighted news sources—triggering plagiarism detection tools.

Outcome:

The site was served DMCA takedown notices and temporarily lost ad revenue. Legal counsel was engaged to assess liability.

Key Lesson:

Output governance is vital to protect against IP violations. Attribution, citation mechanisms, and post-processing reviews are essential.

Comparison of Output Control Across Leading Generative AI Models

| Feature / Platform | Perplexity AI | ChatGPT (OpenAI) | Claude (Anthropic) | Gemini (Google DeepMind) |

| Content Moderation | Basic filtering | Multi-layer moderation (Trust & Safety) | Constitutional AI for safe outputs | Custom safety filters based on Gemini SDK |

| Bias Mitigation | Minimal | Reinforcement learning + moderation | Explicit focus on ethical reasoning | Trained with ethical data + feedback |

| Human-in-the-Loop | No | Optional in enterprise settings | Integrated into Claude’s framework | Available in enterprise workflows |

| Prompt Safety | No restrictions | Prompt classification & restrictions | Automatically reframes unsafe prompts | Prompt filtering & reformulation |

| Transparency Features | Sources cited | Limited explainability | Clear reasoning chains | Working on transparency toolkit |

| Customization | Not currently | Available with GPT-4 Turbo | Limited customization | Fully customizable with Google Cloud AI |

Unique Insights:

- Perplexity AI excels at citing sources, but lacks deeper safety mechanisms—great for factual queries, less suited for sensitive topics.

- ChatGPT has robust moderation and enterprise tools, but transparency is still evolving.

Thinking of using an AI assistant? This Perplexity vs ChatGPT guide breaks it all down—don’t miss out.- Claude by Anthropic shines in ethical reasoning, using its “Constitutional AI” approach for safer outputs.

- Gemini offers the strongest enterprise-level controls, especially for developers needing API-level moderation and policy compliance.

Emerging Standards in AI Governance

As generative AI becomes central to business and society, international governance frameworks are taking shape:

1. EU AI Act (European Union)

- Classifies AI by risk levels (minimal to unacceptable).

- Requires robust safeguards, documentation, and human oversight for high-risk systems.

- Will be enforceable across all member states—global companies must comply.

2. NIST AI Risk Management Framework (USA)

- Focuses on identifying, measuring, and managing AI risk.

- Emphasizes fairness, transparency, safety, and privacy.

- Provides voluntary guidelines but is expected to influence future U.S. regulation.

Conclusion: Why Output Control in Generative AI Matters

Controlling the output of generative AI systems is no longer optional—it is fundamental for ensuring:

- Trustworthiness in public and enterprise use

- Accuracy in factual and decision-critical applications

- Compliance with legal and regulatory frameworks

- Ethical alignment with societal and organizational values

From hallucinated healthcare advice to biased recruitment tools, the consequences of unchecked outputs are real. But with the right combination of tools, prompt design, moderation layers, and human review, organizations can unlock the full power of AI safely and responsibly.

Ready to implement AI responsibly? Explore the top AI moderation tools now or download our AI Output Control Checklist to get started.

Common Questions About Controlling Generative AI Outputs

Q: Why is it important for humans to have tools to control AI?

Humans need tools to control AI to ensure that its outputs are aligned with human values, laws, and social norms. Without proper control, AI can produce biased, false, or harmful content, posing risks to individuals and institutions alike.

Q: Why is controlling the output of generative AI systems important (brain-style)?

Controlling generative AI is like guiding the brain of a system that lacks human reasoning. These systems generate responses based on patterns—not truth. Without output control, they can create content that’s misleading, unethical, or even dangerous, affecting areas like healthcare, law, finance, and media.

Q: Which of the following is a generative AI application?

A correct example would be:

ChatGPT – it generates human-like text based on prompts.

Q: Why is controlling the output of generative AI systems important (quiz style)?

Correct Answer:

To reduce harm, bias, and ensure compliance with ethical and legal standards.

Q: Choose the Generative AI models for language from the following:

Correct Examples:

- GPT-4 (OpenAI)

- Claude (Anthropic)

- LLaMA (Meta)

- Gemini (Google DeepMind)

Q: What is one major ethical concern in the use of generative AI?

A key ethical concern is bias and discrimination in AI-generated content, which can reinforce social inequalities.

Q: What is one challenge in ensuring fairness in generative AI?

A major challenge is training data bias—AI models often learn from unbalanced or prejudiced datasets, which affects their output.

Q: What is the main goal of generative AI?

The primary goal is to generate new content—text, images, audio, or code—that resembles human-created output based on learned patterns.

Q: Which of the following is a use case for traditional AI?

Correct Use Case:

Fraud detection in banking – this involves pattern recognition, not content generation, making it a classic example of traditional (discriminative) AI.